A Natural Language Query Dashboard on Your SAP S4/HANA Data

Summary

- Retrieval Augmented Generation (RAG) allows for adding knowledge from external sources into a Large Language Model (LLM) prompt.

- In this blog post I will be using this approach for using an LLM to retrieve data from an SAP S4/HANA system and visualizing the data using Streamlit.

- I will demonstrate how to build a dashboard converting natural language questions into data visualizations using only a minimal amount of code.

Introduction

In previous posts I have shown ways to utilize LLMs for data extraction by performing natural language queries from SQL databases using Langchain and vanilla GPT-3.

Today I will be using yet another framework for extracting tabular data from SQL databases in the form of LlamaIndex. This type of retrieval is called Retrieval Augmented Generation (RAG), which augments a prompt to an LLM with information retrieved from other sources. These sources can for instance be external documents from corporate knowledge bases or relational databases, the latter being the topic of this blog post.

One of the caveats with the Langchain-based solution described here is the fact that the table and column names from that solution were all descriptive, meaning that the LLM was able to devise queries based on common understanding of the terminology in the database schema itself. Unfortunately, as a reader of that post has emailed me about, standard practice in many enterprise systems is that table names are definitely not as clean as in that specific example. A system I have experience with that springs to mind is the SAP S4/HANA (previously called ECC) system which uses 4-character mnemonics for both table and column names for many of its core tables. An important part of this system’s database schema originates from the 1970’s when space for storing even the database schema itself was very limited. It will be interesting to see how far an LLM will get with a database schema set up like this.

Setup

Some initial setup is required to configure parameters to your database and OpenAI. First checkout the repository at https://github.com/kemperd/s4hana-dashboard.

Create a Python environment using Python 3.10 and install requirements using: pip install -r requirements.txt

The solution will be using SQLAlchemy for connecting to your database. See https://docs.sqlalchemy.org/en/20/dialects/index.html for more information on connecting to your specific product. This may also require modifying the database URL and installing a database-specific package.

Now copy .env.example to .env and modify the file according to your database parameters. Make sure to configure your OpenAI API key and organization settings which you can find on https://platform.openai.com under the “View API keys” menu.

Connecting to the database

The database connection is set up using SQLAlchemy, which has various drop-in packages for connecting to your specific database product. I will be using a connection to SAP HANA which is offered by the sqlalchemy-hana package. Connection can now be made using a database URL:

engine = create_engine("hana+hdbcli://{}:{}@{}:{}".format(username, quote(passwd), hostname, port))

Now a SQLDatabase object needs to be instantiated to connect LlamaIndex to the database:

sql_database = SQLDatabase(engine, include_tables=['ekpo'])

This instructs LlamaIndex to only regard the EKPO table and retrieve the details of its schema. If this is omitted, LlamaIndex will try to retrieve the full database schema which is very large in the case of S4/HANA.

Performing queries

At this point we are able to execute some natural language queries to the database. I have defined the below helper function for this, which also includes a prompt template to guide the LLM the way it returns its results. The following considerations have been made:

- The model needs to only return the data and no additional descriptions

- The data needs to be formatted in CSV format with column headers

- Sometimes the model tries to generate a SQL export statement to have the database export the resultset to CSV, this is explicitly forbidden

The idea is to convert the CSV output into a Pandas DataFrame later on. This could also have been JSON format, but the CSV output was more lightweight given the limitations on token usage.

def query(query_string):

query_engine = NLSQLTableQueryEngine(

sql_database=sql_database,

tables=["ekpo"],

)

query_template = '''{}.

Present the output in CSV format with headers.

Do not generate SQL statements for exporting to CSV.

Do not provide additional descriptions apart from the data.

Always display a header row containing the column descriptions.

'''.format(query_string)

response = query_engine.query(query_template)

return response

Query examples

At this point it is sufficient to call the query() function with your natural language prompt to have LlamaIndex retrieve the results from the database. To access the query results do the following:

response = query('How many purchase orders are there?')

print(response.response)

The generated SQL can be accessed as follows:

print(response.metadata['sql_query'])

Some query examples are shown below:

How many purchase orders are there?

| Results | SQL |

|---|---|

Number of Purchase Orders212 |

SELECT COUNT(*) AS "Number of Purchase Orders"FROM ekpo |

Sum up the gross value of purchase orders

| Results | SQL |

|---|---|

GrossValue34864082432.82 |

SELECT SUM(brtwr) AS GrossValueFROM ekpo |

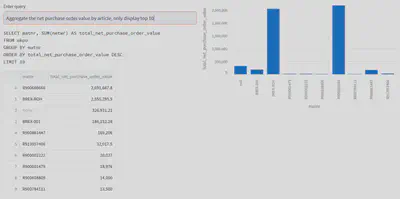

Aggregate the net purchase order value by article, only display top 10

| Results | SQL |

|---|---|

matnr,total_net_purchase_order_value R900666666,2691667.8 BREX-ROH,2555295.9 ,326931.21 BREX-001,186152.28 R900861447,169206 R913057406,32017.5 R900002222,20027 R900001479,18976 R900608809,14000 R900784111,13500 |

SELECT matnr, SUM(netwr) AS total_net_purchase_order_value FROM ekpo GROUP BY matnr ORDER BY total_net_purchase_order_value DESC LIMIT 10 |

Notice that the LLM seems to possess a form of built-in knowledge of the EKPO table from this SAP system. You’ll notice how there are specific references to columns like BRTWR (gross value), NETWR (net value) and MATNR (material) without these having been specified to LlamaIndex in the form of an upfront table description. I attribute this to the fact that the EKPO table is a generic table existing in SAP systems for decades, which is documented throughout the web and has most likely been part of the training set. Note that the setup is using gpt3.5-turbo-0613 by default, which is apparently already good enough for this type of code generation. No need to move to the more complex and costly GPT-4!

Analysis dashboard

I have used the excellent Streamlit (https://streamlit.io) package for interacting with the LLM and visualizing the results from the database. This is by far the most straightforward and feature-rich frontend package I have come across, perhaps even beating Gradio in shortening your development time.

The dashboard starts with displaying an input box for the natural language query. After inputting the query and retrieving the results from the database, it displays the generated SQL, a table containing the result set and a visualization of the results in a single go. This is all in a handful lines of code.

An example video of the dashboard in action is shown below:

Wrapup

In this blog post I have shown you that querying an SAP S4/HANA system using natural language is very effective, even by using a GPT-3.5 model which is no longer state of the art nowadays. It appears that GPT-3.5 possesses knowledge of specific S4/HANA tables, possibly by having been exposed to online resources documenting these.

The Steamlit package allows you to quickly create good-looking dashboards in only a minimal amount of code, making this a great way to glue together the various components of the solution.